Product Import Strategies in Magento 2 – Part 1: The DTO Approach

Introduction

Product imports in Magento 2 can be challenging, especially when dealing with large datasets, complex product attributes, and media files. In this series, we’ll explore three different approaches to handling product imports efficiently. This first part focuses on the Data Transfer Object (DTO) pattern.

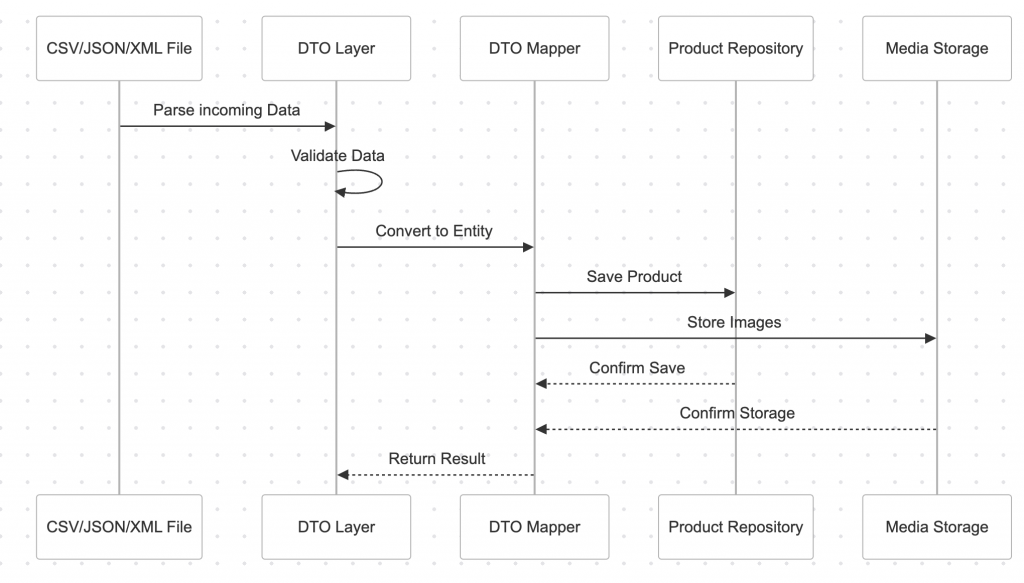

Understanding the DTO Approach

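The DTO pattern wraps each raw import row in a typed object that validates itself on construction. Downstream code such as the import service and the media processor then works with a known, type-checked structure instead of loose arrays, which keeps parsing, validation, and persistence clearly separated.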

Flow diagram

Implementation

1. Product DTO Structure

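    namespace Vendor\Module\DTO;

    class ProductImportDTO
    {
        private string $sku;
        private string $name;
        private ?float $price;
        private ?int $status;
        private array $categories;
        private array $images;
        private array $customAttributes;

        public function __construct(array $data)
        {
            $this->validate($data);
            $this->hydrate($data);
        }

        private function validate(array $data): void
        {
            // Compare against '' instead of using empty(), which would reject the SKU "0"
            if (!isset($data['sku']) || trim((string) $data['sku']) === '') {
                throw new \InvalidArgumentException('SKU is required');
            }
            if (isset($data['price']) && $data['price'] !== '' && !is_numeric($data['price'])) {
                throw new \InvalidArgumentException('Price must be numeric');
            }
            // Additional validation logic
        }

        private function hydrate(array $data): void
        {
            $this->sku = trim((string) $data['sku']);
            $this->name = (string) ($data['name'] ?? '');
            // CSV cells arrive as strings; a missing or empty cell means "no value"
            $this->price = ($data['price'] ?? '') !== '' ? (float) $data['price'] : null;
            $this->status = ($data['status'] ?? '') !== '' ? (int) $data['status'] : null;
            // Every typed property must be initialised, or reading it later throws an Error.
            // The array keys below are assumptions about the shape of the input row.
            $this->categories = (array) ($data['categories'] ?? []);
            $this->images = (array) ($data['images'] ?? []);
            $this->customAttributes = (array) ($data['custom_attributes'] ?? []);
        }

        public function getSku(): string
        {
            return $this->sku;
        }

        public function getImages(): array
        {
            return $this->images;
        }

        // Remaining getters follow the same pattern
    }

2. Import Service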

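    namespace Vendor\Module\Service;

    use Magento\Catalog\Api\ProductRepositoryInterface;
    use Psr\Log\LoggerInterface;
    use Vendor\Module\DTO\ProductImportDTO;

    class ProductImportService
    {
        private ProductRepositoryInterface $productRepository;
        private MediaProcessor $mediaProcessor;
        private LoggerInterface $logger;

        public function __construct(
            ProductRepositoryInterface $productRepository,
            MediaProcessor $mediaProcessor,
            LoggerInterface $logger
        ) {
            $this->productRepository = $productRepository;
            $this->mediaProcessor = $mediaProcessor;
            $this->logger = $logger;
        }

        public function importFromCsv(string $filePath): ImportResult
        {
            $result = new ImportResult();

            try {
                $csvData = $this->readCsvInChunks($filePath);
                foreach ($csvData as $row) {
                    try {
                        $dto = new ProductImportDTO($row);
                        $this->processProduct($dto);
                        $result->addSuccess($dto->getSku());
                    } catch (\Exception $e) {
                        // A bad row is recorded and skipped; the import continues
                        $sku = $row['sku'] ?? 'unknown';
                        $result->addError($sku, $e->getMessage());
                        $this->logger->error($e->getMessage(), ['sku' => $sku]);
                    }
                }
            } catch (\Exception $e) {
                // Failures outside row processing (e.g. an unreadable file) abort the import
                $result->setFatalError($e->getMessage());
            }

            return $result;
        }

        private function readCsvInChunks(string $filePath): \Generator
        {
            $handle = fopen($filePath, 'r');
            if ($handle === false) {
                throw new \RuntimeException("Unable to open CSV file: {$filePath}");
            }

            try {
                $headers = fgetcsv($handle);
                if ($headers === false) {
                    throw new \RuntimeException('CSV file is empty');
                }
                while (($data = fgetcsv($handle)) !== false) {
                    // Blank lines and short rows would make array_combine() fail
                    if (count($data) !== count($headers)) {
                        $this->logger->warning('Skipping CSV row with unexpected column count');
                        continue;
                    }
                    yield array_combine($headers, $data);
                }
            } finally {
                // Runs even if the consumer stops iterating early
                fclose($handle);
            }
        }

        private function processProduct(ProductImportDTO $dto): void
        {
            // getOrCreateProduct() and mapDtoToProduct() are helper methods not shown here
            $product = $this->getOrCreateProduct($dto->getSku());
            $this->mapDtoToProduct($dto, $product);
            // Image handling is delegated to the injected MediaProcessor
            $this->mediaProcessor->processImages($dto, $product);
            $this->productRepository->save($product);
        }
    }

3. Media Processor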
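
The media processor in this section calls `validateImage()` without showing its body. What follows is a minimal sketch of such a check, not the module's actual implementation. The function name `assertValidImage`, the 5 MB size cap, and the list of allowed MIME types are all assumptions made for illustration. It relies only on PHP's built-in `getimagesize()`, so it runs without Magento.

```php
<?php
declare(strict_types=1);

/**
 * Throws if $path is not a readable image of an allowed type and size.
 * The default limits are illustrative assumptions, not Magento defaults.
 */
function assertValidImage(
    string $path,
    int $maxBytes = 5242880,
    array $allowedMimeTypes = ['image/jpeg', 'image/png', 'image/gif', 'image/webp']
): void {
    if (!is_file($path) || !is_readable($path)) {
        throw new InvalidArgumentException("Image not found or unreadable: {$path}");
    }

    $size = filesize($path);
    if ($size === false || $size === 0 || $size > $maxBytes) {
        throw new InvalidArgumentException("Image is empty or exceeds {$maxBytes} bytes: {$path}");
    }

    // getimagesize() inspects the file header, so a text file renamed to .jpg
    // is rejected. The @ hides the notice PHP may emit for truncated files.
    $info = @getimagesize($path);
    if ($info === false || !in_array($info['mime'], $allowedMimeTypes, true)) {
        throw new InvalidArgumentException("Unsupported or corrupt image: {$path}");
    }
}
```

In `processImage()` a check like this would sit directly after the download step, so that invalid files are rejected before any optimization or upload work is done.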

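    namespace Vendor\Module\Service;

    use Magento\Catalog\Model\Product;
    use Vendor\Module\DTO\ProductImportDTO;

    class MediaProcessor
    {
        // Both collaborators are injected through the constructor (omitted here)
        private $mediaDirectory;
        private $imageUploader;

        // Type-hint the Product model: addImageToMediaGallery() is defined there,
        // not on ProductInterface
        public function processImages(ProductImportDTO $dto, Product $product): void
        {
            foreach ($dto->getImages() as $imageData) {
                $this->processImage($imageData, $product);
            }
        }

        private function processImage(array $imageData, Product $product): void
        {
            if (empty($imageData['url'])) {
                throw new \InvalidArgumentException('Image entry is missing a "url" key');
            }

            // downloadImage(), validateImage() and optimizeImage() are helpers not shown here
            $imagePath = $this->downloadImage($imageData['url']);
            $this->validateImage($imagePath);
            $this->optimizeImage($imagePath);

            // $imageUploader is a project-specific collaborator; its upload() contract is assumed
            $this->imageUploader->upload([
                'tmp_name' => $imagePath,
                'name' => basename($imagePath)
            ]);

            $product->addImageToMediaGallery(
                $imagePath,
                ['image', 'small_image', 'thumbnail'], // roles assigned to this image
                false, // $move: copy the file and leave the source in place
                false  // $exclude: keep the image visible on the storefront
            );
        }
    }

Best Practices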

1. Memory Management

- Process CSV files in chunks to handle large datasets

- Clean up temporary files after processing

- Use generators for file reading

- Implement garbage collection for long-running processes
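
The points above can be sketched in plain PHP. The example below is only an illustration under stated assumptions: `processWithCleanup`, `removeFiles`, and the handler contract (a callable that returns the paths of any temporary files it created) are hypothetical names that do not come from the module. Rows are consumed lazily from any iterable, temporary files are deleted at a fixed interval, and `gc_collect_cycles()` is called at the same interval.

```php
<?php
declare(strict_types=1);

/**
 * Applies $handler to each row. Every $cleanupEvery rows it deletes the
 * temporary files reported by the handler and triggers cycle collection.
 *
 * @param iterable $rows    Typically a generator, so rows are read lazily
 * @param callable $handler function (array $row): string[] of temp file paths
 * @return int Number of rows handled successfully
 */
function processWithCleanup(iterable $rows, callable $handler, int $cleanupEvery = 100): int
{
    if ($cleanupEvery < 1) {
        throw new InvalidArgumentException('Cleanup interval must be at least 1');
    }

    $tempFiles = [];
    $processed = 0;

    try {
        foreach ($rows as $row) {
            foreach ($handler($row) as $path) {
                $tempFiles[] = $path;
            }
            $processed++;

            if ($processed % $cleanupEvery === 0) {
                $tempFiles = removeFiles($tempFiles);
                gc_collect_cycles(); // reclaim objects held only by reference cycles
            }
        }
    } finally {
        // Also runs when a row throws, so no temporary files are left behind
        removeFiles($tempFiles);
    }

    return $processed;
}

/** Deletes the given files if they still exist and returns an empty list. */
function removeFiles(array $paths): array
{
    foreach ($paths as $path) {
        if (is_file($path)) {
            unlink($path);
        }
    }

    return [];
}
```

A suitable interval depends on how heavy each row is. Rows that download several images probably justify a smaller value than rows that only update a price.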

2. Error Handling

    class ImportResult
    {
        private array $successful = [];
        private array $errors = [];
        private ?string $fatalError = null;

        public function addSuccess(string $sku): void
        {
            $this->successful[] = $sku;
        }

        public function addError(string $sku, string $message): void
        {
            $this->errors[$sku][] = $message;
        }

        public function setFatalError(string $message): void
        {
            $this->fatalError = $message;
        }

        public function getStatistics(): array
        {
            // Count error entries rather than distinct SKUs, so several failed rows
            // that share a key (for example 'unknown') are all counted
            $failed = array_sum(array_map('count', $this->errors));

            return [
                'total_processed' => count($this->successful) + $failed,
                'successful' => count($this->successful),
                'failed' => $failed,
                'fatal_error' => $this->fatalError
            ];
        }
    }

3. Performance Optimization

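- Switch indexers to "Update by Schedule" mode during the import (`bin/magento indexer:set-mode schedule`) and reindex once afterwards, rather than reindexing on every save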

- Use transactions for batch processing

- Implement caching for frequently accessed data

- Configure proper PHP memory limits
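
To illustrate the caching point, here is a minimal sketch of a per-run lookup cache. The class name `CachedLookup` and the resolver closure are assumptions for illustration. In a real import the closure would wrap whatever repository or database call resolves, for example, a category path to its ID.

```php
<?php
declare(strict_types=1);

/**
 * Wraps an expensive lookup so each distinct key is resolved only once
 * during an import run.
 */
final class CachedLookup
{
    /** @var callable */
    private $resolver;
    private array $cache = [];

    public function __construct(callable $resolver)
    {
        $this->resolver = $resolver;
    }

    /** @return mixed Whatever the resolver returned for this key */
    public function get(string $key)
    {
        // array_key_exists() also caches null results such as "not found"
        if (!array_key_exists($key, $this->cache)) {
            $this->cache[$key] = ($this->resolver)($key);
        }

        return $this->cache[$key];
    }
}

// Usage: the closure is a placeholder for the real (hypothetical) lookup
$calls = 0;
$categoryIds = new CachedLookup(function (string $path) use (&$calls): int {
    $calls++;
    return strlen($path);
});

$categoryIds->get('Default Category/Shoes');
$categoryIds->get('Default Category/Shoes');
echo $calls, PHP_EOL; // prints 1: the repeated key is served from the cache
```

The cache lives only as long as the object, which suits a single import run. It also grows with the number of distinct keys, so it fits small reference data such as categories or attribute options better than per-product data.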

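Configuration

Scalar settings such as the batch size can be injected through `etc/di.xml`. Each `<argument>` name has to match a constructor parameter of `ProductImportService`, so the class needs `$batchSize` and `$memoryLimit` parameters for these values to take effect.

    <?xml version="1.0"?>
    <!-- etc/di.xml -->
    <config xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
            xsi:noNamespaceSchemaLocation="urn:magento:framework:ObjectManager/etc/config.xsd">
        <type name="Vendor\Module\Service\ProductImportService">
            <arguments>
                <argument name="batchSize" xsi:type="number">100</argument>
                <argument name="memoryLimit" xsi:type="string">2G</argument>
            </arguments>
        </type>
    </config>

Advantages of DTO Approach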

- Type Safety
  - Early error detection
  - Clear contract between layers
  - IDE support and auto-completion
- Data Validation
  - Centralized validation logic
  - Strong data consistency
  - Easy to extend and modify rules
- Maintainability
  - Clean separation of concerns
  - Easy to unit test
  - Clear error handling
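
The testability claim can be shown with a small sketch. `MinimalProductDTO` is a trimmed-down stand-in written for this example, not the full `ProductImportDTO`, and the plain checks at the end would normally be PHPUnit test methods. The validation rules are exercised without bootstrapping Magento.

```php
<?php
declare(strict_types=1);

// Trimmed-down stand-in for the import DTO so the sketch runs on its own
final class MinimalProductDTO
{
    private string $sku;
    private ?float $price;

    public function __construct(array $data)
    {
        if (!isset($data['sku']) || trim((string) $data['sku']) === '') {
            throw new InvalidArgumentException('SKU is required');
        }
        $this->sku = trim((string) $data['sku']);
        $this->price = isset($data['price']) ? (float) $data['price'] : null;
    }

    public function getSku(): string
    {
        return $this->sku;
    }

    public function getPrice(): ?float
    {
        return $this->price;
    }
}

/** Returns true if building a DTO from $row is rejected by validation. */
function isRejected(array $row): bool
{
    try {
        new MinimalProductDTO($row);
        return false;
    } catch (InvalidArgumentException $e) {
        return true;
    }
}

// Each of these lines prints bool(true)
$dto = new MinimalProductDTO(['sku' => ' ABC-1 ', 'price' => '19.90']);
var_dump($dto->getSku() === 'ABC-1');
var_dump($dto->getPrice() === 19.9);
var_dump(isRejected(['name' => 'Row without SKU']));
```

Because the DTO has no framework dependencies, tests like these run in milliseconds and can cover each validation rule separately.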

Limitations

- Memory Usage
  - DTOs keep data in memory
  - May require chunking for large datasets
- Complexity
  - Additional layer of abstraction
  - More boilerplate code
  - Learning curve for team members
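
The chunking mentioned under Memory Usage can be sketched as a small generator. This is one possible approach rather than the module's own code, and the helper name `inBatches` is hypothetical. It groups items from any iterable into fixed-size arrays, so only one batch of rows or DTOs is held in memory at a time.

```php
<?php
declare(strict_types=1);

/**
 * Groups items from any iterable into arrays of at most $size elements.
 * Only the batch currently being built is held in memory.
 */
function inBatches(iterable $items, int $size): Generator
{
    if ($size < 1) {
        throw new InvalidArgumentException('Batch size must be at least 1');
    }

    $batch = [];
    foreach ($items as $item) {
        $batch[] = $item;
        if (count($batch) === $size) {
            yield $batch;
            $batch = [];
        }
    }

    if ($batch !== []) {
        yield $batch; // final, possibly smaller, batch
    }
}

// Usage with a lazily generated source of 250 rows and a batch size of 100
$rows = (function (): Generator {
    for ($i = 1; $i <= 250; $i++) {
        yield ['sku' => "SKU-{$i}"];
    }
})();

foreach (inBatches($rows, 100) as $batch) {
    echo count($batch), PHP_EOL; // prints 100, 100, then 50
}
```

A batch is also a natural unit for a database transaction or a progress log entry, which ties this helper to the `batchSize` setting in the configuration.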

When to Use DTO Approach

This approach is ideal when:

- Data validation is crucial

- You need strong typing and IDE support

- The import process has complex business rules

- You want to maintain clean code architecture

Next Steps

In Part 2, we’ll explore the Hybrid approach, which combines programmatic product creation with CSV data enrichment, and see how it can provide more flexibility while maintaining data integrity.