Agile teams are increasingly exploring generative AI to speed up test automation. Imagine turning a Jira user story with clear acceptance criteria into runnable Cypress or Pytest tests within minutes. In this post, we’ll walk through an end-to-end workflow for using AI to automatically generate functional tests during the Agile development lifecycle. We’ll cover each stage: writing good requirements in Jira, crafting effective AI prompts, generating and reviewing test code, and integrating those tests into a CI/CD pipeline (using GitHub Actions and Allure reporting). Along the way, we’ll discuss best practices, benefits, and limitations of this approach.

The End-to-End Workflow: From Jira Story to CI/CD Pipeline

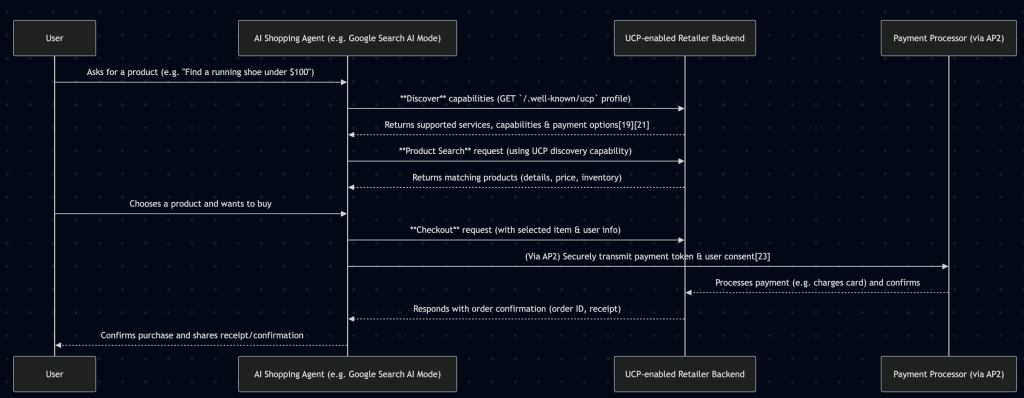

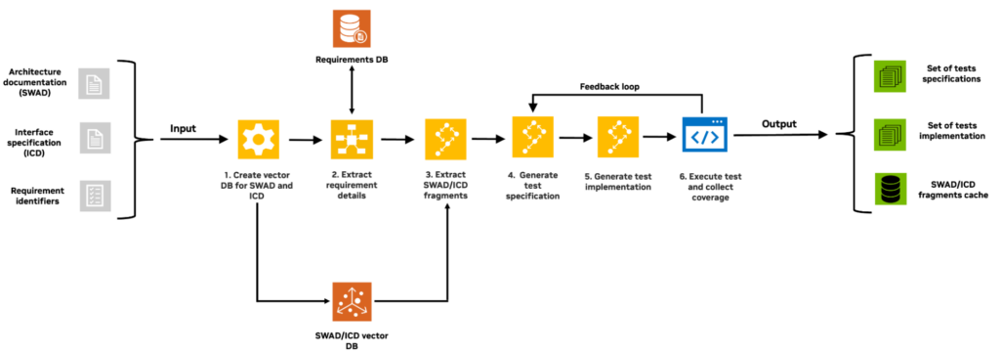

Figure: An example of an AI-driven test generation pipeline. AI can analyze requirements and documentation to generate test code, with feedback loops to improve coverage. In our context, the workflow links Jira, a generative AI, and your CI system in a single feedback loop.

Let’s outline the high-level process in an Agile scenario:

- Write clear Jira tickets – A developer or product owner writes a user story in Jira with well-defined acceptance criteria.

- Generate tests with AI – A QA engineer crafts an AI prompt using the Jira story details. The generative AI (e.g. ChatGPT) produces functional test code (for example, a Cypress end-to-end test or a Pytest API test).

- Review and refine – The team reviews the AI-generated test, making edits as needed to align with the intended behavior and application specifics.

- Integrate into version control – The approved test code is committed to the project’s repository (e.g. on GitHub).

- Run in CI/CD – On the next build, GitHub Actions (or another CI tool) runs the new tests. Results are collected and published in an Allure report for the team to review.

- Iterate – If the test fails, the team determines whether the application has a bug or the test needs adjustment. They fix and repeat, possibly refining the AI prompt for better results next time.

This cycle can significantly accelerate testing. NVIDIA, for example, built an internal AI system that generates tests from specs, saving teams “up to 10 weeks of development time” in trials. Even without such advanced tooling, bringing AI into your test workflow can shorten the time spent writing routine tests, leaving teams more room to focus on complex scenarios.

Start with Clear Requirements and Acceptance Criteria in Jira

Every great test starts with a clear requirement. Jira is often the source of truth for user stories in Agile teams. To leverage AI, ensure that Jira tickets have:

- Specific acceptance criteria: Write concrete scenarios or examples. Instead of “login works correctly,” specify criteria like “Given a valid user, when they enter correct credentials, they should be redirected to the dashboard and see a welcome message.”

- Structured format: Consider using bullet points or even Gherkin syntax (Given/When/Then) in the acceptance criteria. Structured scenarios are easier for an AI to parse and turn into test steps.

- No ambiguity: Clarify any business terms or outcomes. If the story says “user data is saved,” specify where (database? profile page?) and how to verify it. The AI is only as good as your description – vague requirements in Jira lead to vague tests from the AI.

Why is this important? Generative models work only from the text you give them. They can turn requirements or user stories into candidate test cases, but they can’t read your mind. The more explicitly you spell out the expected behavior and edge cases, the more meaningful the generated tests will be. Think of the acceptance criteria as a manual test case, because the AI will essentially use them to write an automated one.
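A team could even run a lightweight pre-check on acceptance criteria before handing them to an AI. The Python sketch below is one hypothetical way to do it: it assumes criteria are plain text with one criterion per line, flags lines without a Given/When/Then shape, and flags phrases like “works correctly” that the guidance above warns against. The phrase list and the review_criteria name are illustrative assumptions; tune them to your team’s conventions.

```python
import re

# Illustrative phrases that often signal an untestable criterion (hypothetical list).
VAGUE_PHRASES = ("works correctly", "is saved", "user-friendly", "fast", "as expected")

# A criterion "looks structured" if Given, When and Then appear in that order.
GHERKIN_PATTERN = re.compile(r"\bgiven\b.*\bwhen\b.*\bthen\b", re.IGNORECASE)


def review_criteria(criteria_text: str) -> list[str]:
    """Return warnings for acceptance criteria given one per line."""
    warnings = []
    lines = [line.strip() for line in criteria_text.splitlines() if line.strip()]
    for number, line in enumerate(lines, start=1):
        if not GHERKIN_PATTERN.search(line):
            warnings.append(f"Criterion {number}: no Given/When/Then structure")
        for phrase in VAGUE_PHRASES:
            # Word boundaries keep "fast" from matching inside "breakfast".
            if re.search(rf"\b{re.escape(phrase)}\b", line, re.IGNORECASE):
                warnings.append(f"Criterion {number}: vague phrase '{phrase}'")
    return warnings


if __name__ == "__main__":
    sample = (
        "Given a valid user, when they enter correct credentials, "
        "then they are redirected to the dashboard\n"
        "Login works correctly"
    )
    for warning in review_criteria(sample):
        print(warning)
```

On that sample, the first criterion passes cleanly while “Login works correctly” gets two warnings: no Given/When/Then structure, and a vague phrase. A check like this won’t catch every ambiguity, but it nudges authors toward criteria an AI can turn into concrete steps.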

Crafting Effective AI Prompts for Test Generation

Once you have a solid Jira story and acceptance criteria, the next step is turning that into a prompt for your AI of choice. Prompt engineering is key to getting useful results. Here are some tips for crafting a good prompt:

- Provide context: Start by telling the AI its role. For example: “You are an expert QA engineer.” This frames the response around testing expertise and conventions.

- Include the user story details: Copy the relevant part of the Jira ticket into the prompt. For example: “Here is a user story: As a shopper, I want to reset my password so that I can regain account access. Acceptance criteria: 1) Given I am on the Forgot Password page, when I submit a valid email, then I see a confirmation message. 2) An email is sent to the address with reset instructions.”

- Specify the test framework and scope: Be explicit about what kind of test you need. For a front-end scenario you might say: “Generate an end-to-end test in Cypress (JavaScript) for the above acceptance criteria.” For backend logic: “Generate a Python Pytest that calls the password reset API and verifies the response.” The AI can write many types of tests, so guide it to the right stack and framework.

- Ask for structure or comments if helpful: You can instruct the AI to include comments or to structure the test in a certain way, for example “Include comments for each step” or “Use BDD-style naming for test cases.”

- Limit scope: If the story has multiple acceptance criteria, you can prompt one at a time (e.g., “Write a test for scenario 1”). This can make the output more focused and easier to follow.
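The “limit scope” tip is easy to automate. Here is a minimal sketch, assuming acceptance criteria are numbered like “1) …”, “2) …” as in the password reset example: it splits them and builds one focused prompt per scenario. The function names and prompt wording are illustrative, not a prescribed format.

```python
import re


def split_criteria(criteria_text: str) -> list[str]:
    """Split numbered criteria such as '1) ... 2) ...' into a list."""
    # Assumes criteria are numbered "1)", "2)", ...; a stray "3)" inside the
    # text would also split, so adjust the pattern to your ticket format.
    parts = re.split(r"\s*\d+\)\s*", criteria_text)
    return [part.strip() for part in parts if part.strip()]


def build_scenario_prompts(story: str, criteria_text: str, framework: str) -> list[str]:
    """Build one focused prompt per acceptance criterion."""
    prompts = []
    for number, criterion in enumerate(split_criteria(criteria_text), start=1):
        prompts.append(
            "You are an expert QA engineer.\n"
            f"User story: {story}\n"
            f"Acceptance criterion {number}: {criterion}\n"
            f"Task: Write an automated test in {framework} that covers only this criterion. "
            "Include a brief comment for each step."
        )
    return prompts
```

Each prompt can then go to the AI in its own request, which keeps every generated test small enough to review in one sitting.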

Prompt template example: (This is a generic template you can adapt.)

**System**: You are a senior test automation engineer using Cypress.

**User**:

We have the following user story and acceptance criteria:

**User Story:** As a shopper, I want to reset my password so that I can regain account access.

**Acceptance Criteria:**

- [1] Given I am on the "Forgot Password" page, when I submit a valid email address, then I should see a message confirming that a password reset link was sent.

- [2] The system sends a password reset email to the address, containing a one-time reset link.

**Task:** Write an automated test in Cypress (JavaScript) that covers scenario [1]. Use best practices for Cypress and include brief comments explaining each step.

In the above prompt, we explicitly provided the context, the exact acceptance criteria, and the required output format (a Cypress test). This reduces the chance of the AI going off-track. Generative models work best when the instructions are precise – the more guidance you give, the closer the test will match your needs.
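The System/User split in the template maps onto the role-based message list that chat-style LLM APIs accept. Below is a minimal sketch, assuming an OpenAI-style chat format (a list of dictionaries with "role" and "content" keys); the build_chat_messages helper is hypothetical, and the actual API call is left out because client libraries and model names vary.

```python
def build_chat_messages(
    story: str, criteria: list[str], scenario: int, tool: str, language: str
) -> list[dict[str, str]]:
    """Assemble system and user messages that mirror the prompt template."""
    if not 1 <= scenario <= len(criteria):
        raise ValueError(f"scenario must be between 1 and {len(criteria)}")
    numbered = "\n".join(f"- [{i}] {text}" for i, text in enumerate(criteria, start=1))
    user_content = (
        "We have the following user story and acceptance criteria:\n\n"
        f"User Story: {story}\n\n"
        f"Acceptance Criteria:\n{numbered}\n\n"
        f"Task: Write an automated test in {tool} ({language}) that covers "
        f"scenario [{scenario}]. Use best practices for {tool} and include "
        "brief comments explaining each step."
    )
    return [
        # The system message sets the role, as in the template above.
        {"role": "system", "content": f"You are a senior test automation engineer using {tool}."},
        # The user message carries the story, criteria, and task.
        {"role": "user", "content": user_content},
    ]
```

Keeping the template in code rather than retyping it means every prompt your team sends has the same structure, which makes differences in output easier to attribute to the requirements rather than to the wording.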

Generating Functional Tests: Cypress and Pytest Examples

Now for the exciting part: generating the test code. With a well-crafted prompt, a generative AI (like ChatGPT or GitHub Copilot) can output a functional test script within seconds. Let’s look at what that might look like in practice for both a web UI test (Cypress) and an API/logic test (Pytest).

Example 1: Cypress end-to-end test – Suppose our Jira story was about the password reset scenario described above. An AI-generated Cypress test might look like this:

```javascript
// AI-generated Cypress Test for Password Reset
describe('Password Reset', () => {
  it('shows a confirmation after submitting a valid email', () => {
    cy.visit('/forgot-password'); // Navigate to the Forgot Password page
    cy.get('input[name="email"]').type('user@example.com'); // Enter a valid email (placeholder test account)
    cy.get('button[type="submit"]').click(); // Submit the form

    // Verify the success message is displayed
    cy.contains('password reset link has been sent').should('be.visible');
  });
});
```

This is a high-level example. The AI inferred the selectors for the email input and submit button from common patterns, and it checked for a confirmation message containing a phrase like “password reset link has been sent,” drawn from the acceptance criteria. In practice, you may need to adjust both the selectors and the message text to match your application exactly. Even so, a basic generated script gives you a head start, covering the happy path in seconds rather than hours.

Example 2: Pytest API test – Suppose the password reset feature is exposed through an API endpoint. A prompt might ask for a Pytest test to validate that endpoint. The AI-generated test could be:

```python
# AI-generated Pytest for Password Reset API
import requests


def test_password_reset_api():
    # Define the API endpoint and payload
    url = "https://myapp.com/api/password-reset"
    payload = {"email": "user@example.com"}  # placeholder test account

    # Call the API (a timeout keeps a hung server from stalling the suite)
    response = requests.post(url, json=payload, timeout=10)
    assert response.status_code == 200

    data = response.json()
    # Verify the response message matches expectation
    assert data.get("message") == "Password reset email sent."
    # (Optional) Verify that no error flag is present
    assert data.get("error") is None
```

Here the AI created a simple API call using requests and checked the status code and response content against the acceptance criteria (for example, expecting a success message). If your project uses a framework test client or a different HTTP library, say so in the prompt; for instance, ask the AI to use Flask’s test client or your project’s own api_client fixture. Again, the output may need small modifications, but it saves the tedium of writing boilerplate.

In both examples, the AI accelerates the creation of boilerplate and basic assertions. It’s especially useful for covering routine scenarios: an AI can produce “happy path” tests or straightforward edge case tests quickly. One team reported that about “70% of the scenarios are valid” when using ChatGPT to generate test ideas, significantly reducing the time spent on creating those scenarios manually. The remaining 30% might need fixes or might be off-base – which is why the next step is crucial.

QA Review: Keeping Humans in the Loop

Don’t skip the review! AI-generated tests are a starting point, not the finish line. Treat them like code written by a junior developer – useful, but in need of oversight. Here’s how to incorporate a QA review:

- Verify correctness: Does the test actually reflect the acceptance criteria and overall requirement? Check that assertions align with expected outcomes. Ensure no acceptance criterion was overlooked or misinterpreted.

- Check for hallucinations: Generative models sometimes make up details. For example, the AI might use a CSS selector or API field that doesn’t exist in your app. Remove or correct these. Always cross-check IDs, element texts, and API fields against the real application.

- Run the test locally: Execute the Cypress test in a browser or run the Pytest suite. If it passes and the feature is implemented, great. If it fails, determine whether the failure comes from a bug in the application or from the test itself. A failure can even be useful: it may reveal a real defect or a mismatch between the app and its requirements (for example, confirmation message text that differs from what the acceptance criteria specify).

- Improve and refactor: Clean up the code style to match your project’s conventions. For instance, you might factor out repetitive code or use fixtures for setup if the AI wrote everything inline. Make the test robust against flakiness: in Cypress, prefer stable selectors (such as dedicated data-* attributes) and rely on its built-in retrying of commands and assertions rather than fixed-time waits.

- Augment with edge cases: The AI might give you the main scenario. Consider prompting it (or using your expertise) to generate additional tests, like negative cases. For example, “What if the email is not registered?” You can either prompt the AI for that scenario or write it yourself based on the pattern of the first test.
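The unregistered-email case can sit right next to the happy path. Here is a minimal sketch in plain Pytest style that runs without a server: the HTTP call goes through a client object, and a hypothetical in-memory stub (FakeResetApi) stands in for the real endpoint. The 404 status and error body for unknown emails are assumptions for illustration; your API may respond differently (some deliberately return the same success message for unknown emails so they don’t reveal which accounts exist).

```python
from dataclasses import dataclass, field


@dataclass
class FakeResponse:
    """Minimal stand-in for an HTTP response object (hypothetical)."""

    status_code: int
    body: dict = field(default_factory=dict)

    def json(self) -> dict:
        return self.body


class FakeResetApi:
    """Hypothetical in-memory stub of the password reset endpoint."""

    def __init__(self, registered_emails: set[str]):
        self.registered_emails = registered_emails

    def post(self, path: str, json: dict) -> FakeResponse:
        # path mirrors a requests-style call; this stub ignores it.
        # Assumed contract: 200 for known emails, 404 with an error otherwise.
        if json.get("email") in self.registered_emails:
            return FakeResponse(200, {"message": "Password reset email sent."})
        return FakeResponse(404, {"error": "Email not registered."})


def make_client() -> FakeResetApi:
    # In a real suite this would likely be a pytest fixture returning a real client.
    return FakeResetApi({"user@example.com"})


def test_reset_succeeds_for_registered_email():
    response = make_client().post("/api/password-reset", json={"email": "user@example.com"})
    assert response.status_code == 200
    data = response.json()
    assert data.get("message") == "Password reset email sent."
    assert data.get("error") is None


def test_reset_rejects_unregistered_email():
    response = make_client().post("/api/password-reset", json={"email": "unknown@example.com"})
    assert response.status_code == 404
    assert "error" in response.json()
```

Pytest collects both test_ functions as usual. Routing every call through one client object is what makes the later switch to a real fixture (a framework test client or a configured HTTP session) a one-line change.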

By keeping QA engineers in the loop, you ensure accuracy and reliability of your test suite. Remember, “LLMs may hallucinate or miss context” – your expert eye is needed to catch those issues. The goal is to save time on boilerplate and grunt work, not to replace human judgment. In fact, reviewing AI outputs can become part of the QA workflow: treat the AI as an assistant that produces a draft, which you then refine.
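Part of the hallucination check can be scripted. The following is a minimal sketch, assuming you can save the rendered HTML of the page under test: it pulls simple attribute selectors such as input[name="email"] out of a generated Cypress spec with a regular expression and reports any that match no element in the HTML. It only understands the cy.get('tag[attr="value"]') form, so treat a clean result as a hint, not proof; missing_selectors is a hypothetical name.

```python
import re
from html.parser import HTMLParser

# Matches cy.get('tag[attr="value"]') calls in a Cypress spec.
SELECTOR_PATTERN = re.compile(r"""cy\.get\('(\w+)\[([\w-]+)="([^"]*)"\]'\)""")


class ElementCollector(HTMLParser):
    """Collect (tag, attribute, value) triples for every element in a page."""

    def __init__(self):
        super().__init__()
        self.triples = set()

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags like <input ... />.
        for name, value in attrs:
            self.triples.add((tag, name, value))


def missing_selectors(spec_source: str, page_html: str) -> list[str]:
    """Return selectors used in the spec that match nothing in the HTML."""
    collector = ElementCollector()
    collector.feed(page_html)
    missing = []
    for tag, attr, value in SELECTOR_PATTERN.findall(spec_source):
        if (tag, attr, value) not in collector.triples:
            missing.append(f'{tag}[{attr}="{value}"]')
    return missing
```

For example, if the real form names its field user_email, the check reports input[name="email"] from the generated password reset test as missing, which is exactly the kind of invented detail a reviewer should fix.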

Integrating AI-Generated Tests into CI/CD (GitHub Actions & Allure)

After the tests have been reviewed and merged into the repository, they become part of your continuous integration cycle. Here’s how to make the most of it:

- Commit to the repo: Once you’re satisfied with the test, commit it on a branch. Ideally, tie it to the feature or user story (some teams even automate linking the test case to the Jira ticket for traceability).

- Continuous integration: With a platform like GitHub Actions, the new tests will run on each push or pull request. For example, if you have a Cypress test suite, you might use the official Cypress GitHub Action or a custom workflow that runs npm run cypress:run (assuming your package.json defines that script). For Pytest, you’d run your Python test suite as usual. Make sure your CI environment has the necessary configuration (browsers for Cypress, etc.).

- Allure reporting: To keep the team in the loop on test results, integrate Allure or a similar reporting tool. Allure generates an interactive report of test outcomes, including steps, screenshots, and logs. You can configure a GitHub Action to upload and publish the Allure report automatically, for instance using the Allure Report Action to deploy the report to GitHub Pages. This means that after each run, anyone (developers, QA, managers) can open a web link to see which tests passed, which failed, and the failure details.

- Feedback to Jira: In a mature setup, you might even automate feedback to Jira. For example, if a test associated with a Jira story fails in CI, the Jira issue could be flagged. Some Jira plugins (like Xray or RTM for Jira) support linking tests to requirements and can update testing status automatically. While this is beyond pure AI generation, it completes the loop by tying results back to your project management.
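The feedback-to-Jira idea can start as a small script. Here is a minimal sketch, assuming pytest was run with --junitxml=results.xml and that each test name embeds its Jira key through a hypothetical naming convention such as test_PROJ_123_reset_password: it parses the JUnit XML and returns the keys of issues whose tests failed or errored. Actually flagging the issues (through the Jira REST API or a plugin such as Xray) is left out.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical naming convention: test_PROJ_123_<description> maps to PROJ-123.
KEY_PATTERN = re.compile(r"test_([A-Z][A-Z0-9]+)_(\d+)_")


def failing_issue_keys(junit_xml: str) -> set[str]:
    """Return Jira keys for test cases that failed or errored."""
    root = ET.fromstring(junit_xml)
    keys = set()
    for case in root.iter("testcase"):
        failed = case.find("failure") is not None or case.find("error") is not None
        match = KEY_PATTERN.search(case.get("name", ""))
        if failed and match:
            keys.add(f"{match.group(1)}-{match.group(2)}")
    return keys
```

Failing tests without a key in their name are simply skipped, so the convention can be adopted gradually, starting with the AI-generated tests tied to specific stories.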

Using CI/CD ensures that AI-generated tests run consistently and catch regressions. One caution: if an AI-generated test is flaky or based on assumptions, it could cause false alarms. Monitor the first few runs. If a test fails due to environment or data issues (not an actual bug), consider fixing the test logic or temporarily quarantining the test. Over time, as confidence grows, these AI-authored tests become just “the test suite” – maintained and improved like any other tests.
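Deciding what to quarantine is easier with data. Below is a minimal sketch, assuming you keep a history of CI results as (commit, test name, passed) records, a hypothetical format: a test that both passed and failed on the same commit is a likely flake rather than a regression, since the code under test didn’t change between runs.

```python
from collections import defaultdict


def flaky_tests(results: list[tuple[str, str, bool]]) -> set[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)  # (commit, test) -> set of observed outcomes
    for commit, test, passed in results:
        outcomes[(commit, test)].add(passed)
    return {test for (_, test), seen in outcomes.items() if seen == {True, False}}
```

Same-commit flips point to environment, data, or timing issues and are good quarantine candidates; a test that fails consistently across commits is more likely catching a real bug.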

Best Practices and Prompt Engineering Tips

Adopting AI for test generation requires some adjustments in team practices. Below are best practices and tips to get the most out of this approach:

- Use examples in prompts: If the AI output isn’t following your style, include a small example in the prompt, such as “Here is a sample test format” followed by a brief template. The AI will tend to mimic that style.

- Keep prompts and conversations focused: Work on one test or feature per prompt (especially when using the chat interface). Too much context can confuse the model. Start fresh chats for distinct topics to avoid bleed-over from previous discussions.

- Leverage GPT-4 or fine-tuned models for complex cases: If you have access, GPT-4 often produces more accurate and coherent code than GPT-3.5. For very domain-specific applications, consider fine-tuning or providing more context about your domain in the prompt.

- Security and IP considerations: Be mindful of what you share with a third-party AI service. Avoid pasting large chunks of proprietary code or sensitive data. Generating tests from requirements usually exposes less than sharing source code, but requirements can still contain confidential details, so always follow your company’s policies. There are self-hosted or on-prem LLM solutions if data governance is a concern.

- Continuous improvement: Maintain a wiki or guide for your team’s prompt patterns that work well. As you learn from failures (e.g., a prompt that led to a weird test), update your approach. Over time, your prompt engineering skills will improve, and so will the quality of initial AI outputs.

- Combine AI approaches: Remember that generative AI can help beyond just writing code. It can suggest test scenarios you might not think of. Some teams use it for brainstorming: “List edge cases for the login feature”. AI can also assist with test data generation or converting manual test cases to automated ones. Be creative in piloting new ideas.
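The “use examples in prompts” tip is a form of few-shot prompting: you show the model a sample in your house style before asking for new output. Here is a minimal sketch with a hypothetical build_few_shot_prompt helper; the embedded Cypress sample (including its data-cy selector convention) is purely illustrative and should be replaced with a real test from your repository.

```python
# Illustrative style sample; swap in a real test from your own suite.
STYLE_EXAMPLE = """\
describe('Login', () => {
  it('redirects a valid user to the dashboard', () => {
    cy.visit('/login');
    cy.get('[data-cy="email"]').type('user@example.com');
  });
});"""


def build_few_shot_prompt(task: str, example: str = STYLE_EXAMPLE) -> str:
    """Prefix the task with a sample test so the model mimics its style."""
    return (
        "Here is a sample test in our team's format:\n\n"
        f"{example}\n\n"
        "Follow the same structure, naming, and selector conventions.\n\n"
        f"Task: {task}"
    )
```

Storing the sample alongside your prompt templates also gives the team wiki mentioned above something concrete to point to.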

By following these practices, you establish a human-AI partnership in testing. The AI handles the repetitive parts and suggests ideas, while the human testers guide the AI and ensure the resulting tests are valid.

Benefits vs. Limitations of AI-Generated Tests

Like any new technology, using generative AI for test creation comes with pros and cons. Let’s summarize the key benefits and limitations:

✅ Benefits:

- Speed and efficiency – An AI can draft test cases in seconds, giving you a rapid starting point. This accelerates the testing phase and can be a boon when test coverage is lagging behind in a sprint.

- Reduced grunt work – Boilerplate code and repetitive tests (like similar CRUD operations or form validations) can be generated quickly, freeing up engineers to focus on complex testing scenarios.

- Improved test coverage – The AI might suggest tests for edge cases that developers/QA didn’t consider. This can lead to broader coverage, especially if you prompt for “additional negative tests” or “corner case scenarios.”

- Onboarding and learning – Junior testers or developers can use AI-generated tests to learn proper structure and practices. It’s like having an example library at your fingertips.

- Consistency – If you establish a standard prompt, the AI’s output will follow a consistent pattern (naming conventions, structure), which can unify the style of tests across a team.

⚠️ Limitations:

- Potential inaccuracies – AI doesn’t truly understand your application. It might assume things that aren’t true, leading to incorrect tests. Overconfidence in generated code is risky. Always verify each test’s logic.

- Maintenance burden – If an AI-generated test is wrong or flaky, maintaining it can cost time. Also, when the application changes, these tests need updating like any other. If the team treats them as “second-class” and doesn’t maintain them, they’ll quickly become obsolete.

- Context limitations – The AI only knows what you tell it (or what it was trained on generally). It might not handle complex stateful scenarios well (e.g., multi-user interactions, external integrations) without extensive prompting. It has limited ability to infer underlying app state or data dependencies.

- No replacement for human creativity – Test design isn’t only about checking boxes in acceptance criteria. Exploratory testing, UX nuances, and unpredictable user behavior are areas where human insight is irreplaceable. AI can help with known patterns, but it won’t invent clever tests that nobody thought to write (at least not reliably).

- Hallucinations and format issues – Sometimes the AI may output syntactically incorrect code or use outdated API patterns. For example, it might produce a Cypress command that doesn’t exist or a deprecated Pytest usage if it’s drawing from older training data. These issues are usually easy to fix, but they underscore why human review is mandatory.

In summary, generative AI is a powerful assistant, but not an infallible one. Use it to augment your testing, not to replace the critical thinking and expertise of your QA team. As the title of one LinkedIn article by a tech lead asks, are LLMs in testing a “game-changer or just hype”? The reality is somewhere in between, with great potential if used wisely.

Getting Started: How to Pilot AI-Generated Testing in Your Team

Interested in trying this out? Start small and learn as you go. Here’s an action plan to pilot AI-generated testing in your workflow:

- Select a candidate project or feature: Pick a relatively well-defined, low-risk user story in an upcoming sprint. Ideally, choose something that is important enough to have good acceptance criteria, but not so critical that any testing hiccup would be catastrophic.

- Ensure clarity of requirements: Before you even involve the AI, have the team review the Jira story. Are the acceptance criteria testable and explicit? If not, improve them. This step benefits your process regardless of AI usage.

- Use a known AI tool initially: The easiest way to start is using ChatGPT via the web interface or an IDE plugin like GitHub Copilot. You don’t need full automation from day one. Copy the acceptance criteria, paste into ChatGPT with a prompt like we discussed, and see what it gives. Treat it as an experiment.

- Review collaboratively: Take the AI output to your next QA sync or developer peer review and evaluate it together. This trains your team to spot AI quirks and builds buy-in. It’s fun to see what the AI got right or wrong, and it often sparks discussion about the requirements themselves.

- Incorporate into version control carefully: Maybe create a feature branch or a draft pull request with the new test. Mark it clearly as an AI-generated test in the description. This isn’t to shame the AI, but to let reviewers know they might encounter non-obvious mistakes. Once approved, merge it.

- Monitor in CI: Run the test in your CI pipeline. If you’re using GitHub Actions, check that the test passes consistently. If it fails, diagnose why. This will teach you either something about your app or about how to prompt better next time. Publish the results with Allure or even a simple CI log – the key is to make sure it’s visible whether the test is stable.

- Gather feedback: After a sprint or two of using AI for test generation on a few stories, have a retrospective. Did it save time? Are developers and QAs confident in the tests? Did it catch any bugs or regressions? Use these insights to adjust. Maybe you’ll find it’s great for front-end UI tests but not as useful for certain backend scenarios (or vice versa).

- Consider tools and integration: If the pilot is promising, you can look into deeper integration. For example, Jira plugins (as of 2025 there are a few on the marketplace) can generate test cases right inside Jira. Or you might script something using the OpenAI API to automate pulling acceptance criteria from Jira and pushing code to a repo (the Medium tutorial by Vidya shows a basic example of this pipeline). These are nice-to-have once you’ve proven the concept manually.

- Educate and establish guidelines: Create a short internal guide (maybe in Confluence) for how to use AI in testing. Include your prompt templates, do’s and don’ts, and a channel for the team to share experiences. As more people try it, the collective knowledge will grow.
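If you do script the Jira side, the first step is pulling the story text out of the issue. Here is a minimal standard-library sketch, assuming Jira’s REST API (GET /rest/api/2/issue/{key}) with basic authentication using an email and API token, and assuming acceptance criteria live in a custom field whose ID (customfield_10000 below) is hypothetical and differs per Jira instance. Only the parsing function can run offline; the fetch needs real credentials.

```python
import base64
import json
import urllib.request

# Hypothetical: the custom field ID for acceptance criteria differs per instance.
ACCEPTANCE_FIELD = "customfield_10000"


def fetch_issue(base_url: str, key: str, email: str, api_token: str) -> dict:
    """Fetch an issue as JSON from Jira's REST API (v2) using basic auth."""
    credentials = base64.b64encode(f"{email}:{api_token}".encode()).decode()
    request = urllib.request.Request(
        f"{base_url}/rest/api/2/issue/{key}",
        headers={"Authorization": f"Basic {credentials}", "Accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def story_for_prompt(issue: dict, field: str = ACCEPTANCE_FIELD) -> dict:
    """Pull the pieces a test-generation prompt needs from an issue payload."""
    fields = issue.get("fields", {})
    return {
        "key": issue.get("key", ""),
        "summary": fields.get("summary") or "",
        "description": fields.get("description") or "",
        "acceptance_criteria": fields.get(field) or "",
    }
```

Keeping the fetch and the parsing separate lets you test the parsing against saved payloads, and swap the fetch for a plugin or a different API version later without touching the prompt-building code.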

Starting with these steps ensures that you pilot the approach safely and learn what works for your context. Many teams report that using AI for test generation is a “productivity boost” when used judiciously. By involving the team in the trial, you also address cultural aspects – people often fear new tech like AI, but seeing it in action demystifies it and shows that it’s a tool, not a replacement for their jobs.

Conclusion

Generative AI is poised to become a valuable ally in software testing. In an Agile development world, where we strive to ship fast and continuously, AI-generated tests can keep pace with rapid code changes, ensuring quality isn’t left behind. We explored how starting from a Jira user story, you can prompt an AI to produce functional tests, and then integrate those into your CI pipeline with tools like Cypress, Pytest, GitHub Actions, and Allure.

The key takeaways? Preparation and validation. Invest time in clear requirements and good prompts – you’ll get far better tests as output. And always loop back with human insight – review what the AI creates, and use your team’s expertise to polish it. When done right, you get the best of both worlds: the speed of automation and the wisdom of experienced testers.

As with any new approach, there will be bumps along the way. Some AI suggestions will be off-target (or downright amusing), and you’ll refine your strategies to handle that. But even in its current state, this technology offers tangible productivity gains. It can “accelerate test authoring” and reduce “repetitive effort”, allowing your team to focus on high-value testing and quality improvements.

Call to action: Why not give it a try in your next sprint? Take one upcoming user story and see what tests an AI can generate for you. You might be surprised by how much of the heavy lifting it can handle. And if you’ve already been experimenting with AI in QA, share your experiences (in the comments or with your network) – the testing community is eager to learn what works and what doesn’t. By collaborating and sharing knowledge, we can all harness this new wave of AI to build faster, better tests and, ultimately, better software.

Sources:

- Vidya, “OpenAI ChatGPT and Cypress Integration,” Medium (Feb 17, 2025) – Tutorial on fetching Jira stories and using ChatGPT to generate test scenarios.

- Ajay Kulkarni, “Using ChatGPT & LLMs to Generate Unit and E2E Tests: Game-Changer or Just Hype?” LinkedIn (Apr 27, 2025) – Insights on how teams use LLMs for testing and pros/cons.

- Leodanis Pozo Ramos, “Write Unit Tests for Your Python Code With ChatGPT,” Real Python (2023) – Benefits of AI in test writing and the importance of reviewing generated tests.

- Halina Cudakiewicz, “AI-driven test management: Streamlining your QA workflow,” Deviniti Blog (Apr 03, 2025) – Overview of AI in test case generation and Jira integration tools.

- Max Bazalii, “Building AI Agents to Automate Software Test Case Creation,” NVIDIA Technical Blog (Oct 24, 2024) – Case study of NVIDIA’s HEPH tool for AI-driven test generation and its impact.